Troubleshooting SPSearch and good practices for moving large files

Every so often I get back into geek mode and roll up the sleeves and get my SharePoint hands dirty. Not so long ago I was assisting a small WSS3 based organisation with a major disk subsystem upgrade (iSCSI SAN) and locally attached storage upgrade, driven by content database growth and a need to convert some sites to site collections. By the end of it all, we had a much better set-up with a much better performing disk subsystem, but I was hit by two problems. One was WSS Search being broken and needing a fix and the other was the appallingly slow speed of copying large files around.

So, let’s talk about fixing broken search and then talk about copying large files.

1. When SPSearch breaks…

The SharePoint install in question was a WSS3 site with SP1, and Search Server 2008 had not been installed. The partition that contained the indexes (F:\) had to be moved to another partition (G:), so to achieve this I used the command

stsadm –o spsearch indexlocation G:\DATA\INDEX

Now, 99.9% of the time this will happily do its thing. But today was the 0.01% of the time when it decided to be difficult. Upon executing this command, I received an RPC error. Unfortunately for me, I was out of time, so I decided to completely re-provision search and start all over again.

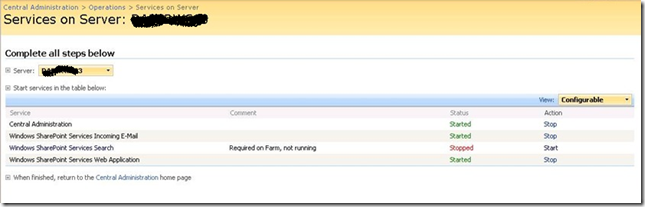

It didn’t matter whether I tried this in Central Administration->Operations->Services on Server, or via the command line below. Both methods would not work.

stsadm -o spsearch -action stop

On each attempt, search would get stuck on unprovisioning (stopping) with a sequence of events in the event log (eventID 2457, 2429 and 2428).

===========================================================================================

Event Source: Windows SharePoint Services 3 Search Event Category: Gatherer Event ID: 2457 Description: The plug-in manager <SPSearch.Indexer.1> cannot be initialized. Context: Application 'Search index file on the search server' Details: The system cannot find the path specified. (0x80070003) =========================================================================================== Event Source: Windows SharePoint Services 3 Search Event Category: Gatherer Event ID: 2429 Description: The plug-in in <SPSearch.Indexer.1> cannot be initialized. Context: Application '93a1818d-a5ec-40e1-82d2-ffd8081e3b6e', Catalog 'Search' Details: The specified object cannot be found. Specify the name of an existing object. (0x80040d06) ===========================================================================================

Event Source: Windows SharePoint Services 3 Search Event Category: Gatherer Event ID: 2428 Description: The gatherer object cannot be initialized. Context: Application 'Search index file on the search server', Catalog 'Search' Details: The specified object cannot be found. Specify the name of an existing object. (0x80040d06)

So, as you can see I was stuck. I couldn’t not clear the existing configuration and the search service would never actually stop. In the end, I started to wonder whether the problem was that my failed attempt to change the index partition had perhaps not reapplied permissions to the new location. To be sure I reapplied permissions using the following STSADM command

psconfig -cmd secureresources

This seemed to do the trick. Re-executing the stsadm spsearch stop command finally did not come up with an error and the service was listed as stopped.

Once stopped, we repartitioned the disks accordingly and now all I had to do was start the damn thing 🙂

Through the Central Administration GUI I clicked Start and re-entered all of the configuration settings, including service accounts and the new index location (G:\DATA\INDEX). After a short time, I received the ever helpful “Unknown Error” error message.

Rather than change debug settings in web.config, I simply checked the SharePoint logs and the event viewer. Now, I had a new event in the logs.

Event Type: Warning Event Source: Windows SharePoint Services 3 Search Event Category: Gatherer Event ID: 10035 Description: Could not import the registry hive into the registry because it does not exist in the configuration database. Context: Application '93a1818d-a5ec-40e1-82d2-ffd8081e3b6e'

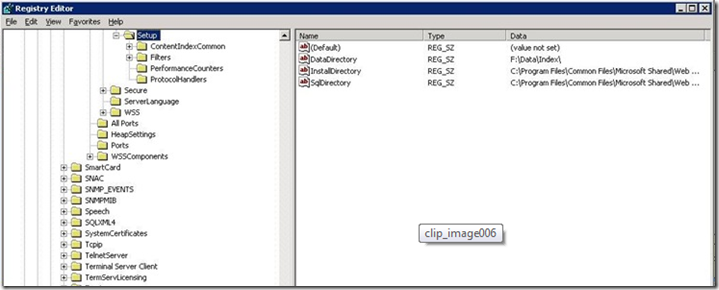

Hmm… It suggests a registry issue, so I checked the registry.

Although the error message really made no sense to me, checking the registry turned out to be the key to solving this mystery. If you look carefully in the above screenshot, note that the registry key DataDirectory was set to “F:\DATA\INDEX”.

I was surprised at this, because I had re-provisioned the SPSearch to use the new location (G:\DATA\INDEX). I would have thought that changing the default index location would alter the value of this key. A delve into the ULS logs showed events like this.

STSADM.EXE (0x0B38) 0x0830 Search Server Common MS Search Administration 95k1 High WSS Search index move: Changing index location from ‘F:\data\index’ to ‘G:\data\index’.

STSADM.EXE (0x0B38) 0x0830 Search Server Common MS Search Administration 95k2 High WSS Search index move: Index location changed to ‘G:\data\index’.

STSADM.EXE (0x0B38) 0x0830 Search Server Common MS Search Administration 0 High CopyIndexFiles: Source directory ‘F:\data\index\93a1818d-a5ec-40e1-82d2-ffd8081e3b6e’ not found for application ’93a1818d-a5ec-40e1-82d2-ffd8081e3b6e’.

STSADM.EXE (0x0F10) 0x1558 Windows SharePoint Services Topology 8xqz Medium Updating SPPersistedObject SPSearchServiceInstance Parent=SPServer Name=DAPERWS03. Version: 218342 Ensure: 0, HashCode: 54267293, Id: 305c06d7-ec6d-465a-98be-1eafe64d8752, Stack: at Microsoft.SharePoint.Administration.SPPersistedObject.Update() at Microsoft.SharePoint.Administration.SPServiceInstance.Update() at Microsoft.SharePoint.Search.Administration.SPSearchServiceInstance.Update() at Microsoft.Search.Administration.CommandLine.ActionParameter.Run(StringBuilder& output) at Microsoft.SharePoint.Search.Administration.CommandLine.SPSearch.Execute() at Microsoft.Search.Administration.CommandLine.CommandBase.Run(String command, StringDictionary keyValues, String& output) at Microsoft.SharePoint.StsAdmin.SPStsAdmin.RunOperation(SPGlobalAdmin globalAdmin, String st…

mssearch.exe (0x1654) 0x1694 Search Server Common IDXPIPlugin 0 Monitorable CTripoliPiMgr::InitializeNew – _CopyNoiseFiles returned 0x80070003 – File:d:\office\source\search\ytrip\search\tripoliplugin\tripolipimgr.cxx Line:519

mssearch.exe (0x1654) 0x1694 Search Server Common Exceptions 0 Monitorable <Exception><HR>0x80070003</HR><eip>0000000001D4127F</eip><module>d:\office\source\search\ytrip\search\tripoliplugin\tripolipimgr.cxx</module><line>520</line></Exception>

Note the second last line above (marked bold and italic). It showed that a function called CopyNoiseFiles returned a code of 0x8007003. This code happens to be “The system cannot find the path specified,” so it appears something is missing.

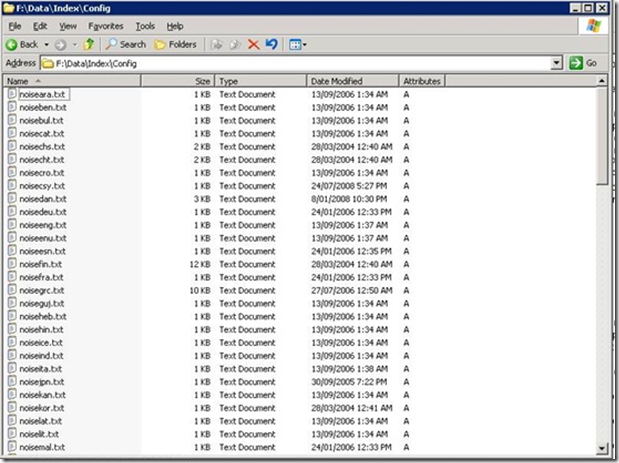

It then dawned on me. Perhaps the SharePoint installer puts some files into the initially configured index location and despite moving the index to another location, SharePoint still looks to this original location for some necessary files. To test this, I loaded up a blank Windows 2003 VM and installed SharePoint SP1 *without* running the configuration wizard. When I looked in the location of the index files, sure enough – there are some files as shown below.

It turned out that during our disk reconfiguration, the path of F:\DATA\INDEX no longer existed. So I recreated the path specified in the registry (F:\DATA\INDEX) and copied the contents of the CONFIG folder from my fresh VM install. I then started the search service from Central Administration and… bingo! Search finally started successfully…Wohoo!

Now that I had search successfully provisioned, I re-ran the command to change the index location to G:\DATA\INDEX and then started a full crawl.

C:\>stsadm -o spsearch -indexlocation G:\DATA\INDEX

Operation completed successfully.

C:\>stsadm -o spsearch -action fullcrawlstart

Operation completed successfully.

I then checked the event logs and now it seems we are cooking with gas!

Event Type: Information Event Source: Windows SharePoint Services 3 Search Event Category: Gatherer Event ID: 10045 Description: Successfully imported the application configuration snapshot into the registry. Context: Application '93a1818d-a5ec-40e1-82d2-ffd8081e3b6e' Event Type: Information Event Source: Windows SharePoint Services 3 Search Event Category: Gatherer Event ID: 10044 Description: Successfully stored the application configuration registry snapshot in the database. Context: Application 'Serve search queries over help content'

As a final check, I re-examined the registry and noted that the DataDirectory key had not changed to reflect G:\. Bear that in mind when moving your index to another location. The original path may still be referred to in the configuration.

2. There are RAID cards and there are RAID cards

Since part of the upgrade work was to improve disk performance, we had to detach databases and move them around while we upgraded the disk infrastructure and repartitioned existing disk arrays. The server had an on-board Intel RAID controller with two arrays configured. One was a two-disk RAID 0 SCSI and the other was a three-disk RAID 5 SATA array. The performance of the RAID 5 SATA had always been crap – even crappier than you would expect from onboard RAID 5. When I say crap, I am talking around 35 megabytes per second transfer rate – even on the two-disk SCSI RAID 0 array.

Now, 35MB/sec isn’t great, but not completely intolerable. But, what made this much, much worse however, was the extreme slowness in copying large files (ie >4GB). When trying to copy files like this to the array, the throughput dropped to as low as 2MBs.

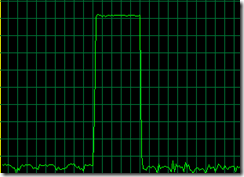

No matter whether it was Windows Explorer drag and drop or a command line utility like ROBOCOPY, the behaviour was the same. Throughput would be terrific for around 60 seconds, and then it would drop as shown below.

My client called the server vendor and was advised to purchase 4 SCSI disks to replace the SATA’s. Apparently the poor SATA performance was actually because SCSI and SATA were mixed on the same RAID bard and bus. That was a no-no.

Sounded plausible, but of course, after replacing the RAID 5 with the SCSI disks, there was no significant improvement in disk throughput at all. The performance of large files still reflected the pattern illustrated in the screenshot above.

Monitoring disk queue length on the new RAID array showed that disk queues were off the planet in terms of within normal boundaries. Now, I know that some people view disk queue length as a bit of an urban myth in terms of disk performance, but copying the same files to the iSCSI SAN yielded a throughput rate of around 95MBs and the disk queue value rarely spiked above 2.

Damn! My client wasn’t impressed with his well known server vendor! Not only does the onboard RAID card have average to crappy performance to begin with, RAID 5 with large files makes it positively useless.

Fun with buffers

To me, this smelt like a buffer type of issue. Imagine you are pouring sand into a bucket and the bucket has a hole in it. If you pour sand into the bucket at a faster rate than the hole allows sand to pour out, then eventually you will overflow the bucket. I suspected this sort of thing was happening here. The periods of high throughput were when the bucket was empty and the sand filled it fast. Then the bucket filled up and things slowed to a crawl while all of that sand passed through the metaphorical hole in the bottom. Once the bucket emptied, there was another all-too-brief burst of throughput as it filled quickly again.

I soon found a terrific article from EPS Windows Server Performance Team that explain what was going on very clearly.

Most file copy utilities like Robocopy or Xcopy call API functions that try and improve performance by keeping data in a buffer. The idea is that files that are changed or accessed frequently can be pulled from the buffer, thereby improving performance and responsiveness. But there is a trade-off. Adding this cache layer introduces an overhead in creating this buffer in the first place. If you are never going to access to copy this file again, adding it to the buffer is actually a bad idea.

Now imagine a huge file. Not only do you have the buffer overhead, but you now are also filling the buffer (and forcing it to be flushed), over and over again.

With a large file, you are actually better off avoiding the buffer altogether and doing a raw file copy. Any large file on a slow RAID card will still take time, but it’s a heck of a lot quicker than when combined with the buffering overhead.

Raw file copy methods

In the aforementioned article from the Microsoft EPS Server performance team, they suggest ESEUTIL as an alternative method. I hope they don’t mind me quoting them…

For copying files around the network that are very large, my copy utility of choice is ESEUTIL which is one of the database utilities provided with Exchange. To get ESEUTIL working on a non-Exchange server, you just need to copy the ESEUTIL.EXE and ESE.DLL from your Exchange server to a folder on your client machine. It’s that easy. There are x86 & x64 versions of ESEUTIL, so make sure you use the right version for your operating system. The syntax for ESEUTIL is very simple: eseutil /y <srcfile> /d <destfile>. Of course, since we’re using command line syntax – we can use ESEUTIL in batch files or scripts. ESEUTIL is dependent on the Visual C++ Runtime Library which is available as a redistributable package

I found an alternative to this, however, that proved its worth to me. It is called Teracopy and we tried the free edition to see what difference it would make in terms of copy times. As it happens, the difference was significant and the time taken to transfer large files around was reduced by a factor of 5. Teracopy also produced a nice running summary of the copy thoughput in MB/sec.The product definitely proved its worth and at a whopping $15 bucks, is not going to break the bank.

So, if you are doing any sort of large file copies and your underlying disk subsystem is not overly fast, then I recommend taking a look at this product. Believe me, it will save you a heap of time.

3. Test your throughput

A final note about this saga. Anyone who deals with SQL Server will have likely read articles about best practice disk configuration in terms of splitting data/logs/backups to different disk arrays to maximise throughput. This client had done this, but since Teracopy gave us nice throughput stats, we took the opportunity to test the read/write performance of all disk subsystems and it turned out that putting ALL data onto the SAN array had significantly better performance than using any of the onboard arrays.

This meant that the by-the-book configuration was hamstrung by a poorly performing onboard RAID controller and even if the idea of using separate disks/spindles seemed logical, the cold hard facts of direct throughput testing proved otherwise.

After reconfiguring the environment to leverage this fact, the difference in response time of WSS, when performing bulk uploads was immediately noticeable.

The moral of the story?

If you are a smaller organisation and can’t afford the high end server gear like Compaq/IBM, then take the time to run throughput tests before you go to production. The results may surprise you.

Thanks for reading

Paul Culmsee

0 Comments on “Troubleshooting SPSearch and good practices for moving large files”