SQL God? No… I just know how to do a maintenance plan

I am working on an essay about IT complexity at the moment, and one thing that sprung into my mind while thinking about this, is the fact that many of my clients seem to think I am some sort of SQL Server guru.

There are two sad realities inferred by this.

Firstly, I am far from a SQL Server god. Yes I have experience with it, but the only reason people think I’m any good at it stems from the general lack of knowledge that they have about the product. Often all I have to do is waltz in with my Michael Buble like smooth charm and recommend a maintenance plan be set up and I am instantly the guy to talk to in all things SQL.

The truth of the matter is that I’m not fit to lick the boots of a skilled DBA. But like all former system administrators/infrastructure managers who have had to deal with the pressure and consequences of downtime, irrespective of the product, I developed a reflex to learn what I need to to cover my butt. Often I didn’t care less how a product operated from the end-user perspective. All I cared about was how it hung together so I could recover it when it inevitably failed in some way.

The second sad reality, is that a large percentage of sites that I visit, have poorly configured SQL Server boxes on a number of levels. This isn’t necessarily the fault of the overworked support staff as SQL Server is just one of the many IT devices and services that they have to maintain and they are simply too stretched to give SQL the sort of attention it needs.

Sad reality = Risk to the organisation

SQL Server underpins many products in the Microsoft ecosystem and often many 3rd party products ship with the free edition of SQL Server as a back-end data store. I suspect that this results in many administrators simply leaving SQL Server to "do its thing" and focus higher up (or lower down) the application stack. (Developers often make the same mistake too and write poorly optimised SQL code).

The thing that sucks about being a system administrator or infrastructure person is that when things are going well, you are essentially invisible to the success of the organisational machine. Then when something goes down, people scream at you to get it back again. Many system administrators are also perpetually busy, and therefore it is common to see corners cut in terms of overall quality around infrastructure management.

The reality for most organisations is that SQL Server is likely to be one of your most critical components its infrastructure even before SharePoint is added to the mix. It likely underpins various line of business tools, and a prolonged outage is likely to hurt. But to paint with a *really* generalised brush, users still spend a lot of their time working with stuff on the traditional file server and email. So if a line of business application is down due to a dead SQL box, one could argue that your user base is still somewhat productive if file/print and email still works.

But as soon as you add SharePoint to the SQL equation (and especially if you use SharePoint in a document central collaboration scenario), then SQL becomes a real focal-point. Now if SQL goes down, you are down to basic email because you have lost a core component that provides your file services, as well as your line of business applications. If you view this from a productivity standpoint, then the cost of downtime is now significantly higher as a result.

Be Afraid…

In my travels, I have consulted to and work with quite a variety of organisations, large and small, across most vertical markets and in various regional (and worldwide) locations. Despite the variety of organisations, I see the same sort of stuff over and over again. In fact, seeing a well set-up and managed SQL Server is really the exception rather than the norm.

Examples of the problems that I commonly see around SQL are:

Administrator/sa privilege abuse

This issue comes in two forms. The first one is when all of the SQL Service accounts run using an account with "local administrator", or worse, "Domain Administrator" privilege.

The second form is when applications access databases using the ‘sa’ account or a local/domain administrative account.

Both of these drive me nuts because it either reflects a lack of security awareness or a poor security posture. Unsurprisingly perhaps, when I find this setting at client sites, there is a very good chance that the SharePoint service accounts (and many other services) also run with far too much privilege. Perhaps the effects of SQL Slammer has been long forgotten eh?

In any event, the risk profile for this sort of setup for me jumps significantly, not because I believe that the SQL box is about to be hacked, but because it tells me so much more about the organisations awareness and acumen around the value of their information assets. Adding SharePoint to this mix? Sheesh! Why not attend a Michael Bolton concert instead? Both will hurt you, but the latter you can recover from.

No maintenance plans (or ancient ones)

…and people wonder why it’s slow.

This is a very serious issue, and I would have to say that more than half of the sites that I visit do not have any SQL Server maintenance plans.

If you are responsible for maintaining a SharePoint or SQL infrastructure and do not know what a maintenance plan is, then in all seriousness, I urge you to read up on them and check the example screengrab below. As I mention in this article, maintenance plans are the SQL equivalent of your very own personal fitness trainer who makes sure that you go to the gym, do your push-ups and maintain a healthy lifestyle. You use maintenance plans to perform integrity checks of your databases, optimise their performance, run backups and various other tasks. A SQL Server without a maintenance plan is like the band Queen without Freddie Mercury. Sure it will keep going, but it will provide a sub-optimal user experience.

The other big problem area is where there actually *are* maintenance plans, but they were set up in an age before time and have gathered dust ever since. They typically do not reflect current reality and more recent databases are not included in it. Often, although defined, they do not run because service accounts have changed, or the SQL Agent is stopped. This scenario is arguably worse that no maintenance plan at all, as it can lead to a false sense of security.

General "Baggage"

Another huge issue that I see all to often is "baggage". Often I am called in to manage a collaborative SharePoint implementation and the "SQL box" underpinning this critical service is in a mess from years of neglect and the client expects to drop SharePoint onto it. Typical examples? How about when SQL Server has a large list of databases, that nobody can identify, due to poor naming and a lack of change control/configuration management. Often I will see databases that were ‘temporarily’ created for a restore operation – only the restore was in 2006 and the database has sat there ever since.

Another telltale sign of baggage is to count the number of SQL jobs defined on the server. If there are none at all, then you know its a potentially suspect install. But if there are dozens of them in an error state with ambiguous names that make it difficult to work out what they actually do, then you know it is totally a suspect install! So often do I see SQL jobs listed that have long failed with no-one noticing because operators and alerts have not been set up (which I will get to next).

Another common occurrence I see in relation to failed database jobs is because they are set to run using the credentials of *end-users*, often developers. The very fact that jobs are defined using the personal account of a user implies that the user has some degree of privileged access to SQL Server. Of course, these same people will change their passwords 6 weeks later and then wonder why their account gets locked out from time to time because the job they set-up is long forgotten yet trying to execute in vain on its schedule.

One has to ask, would you trust your SharePoint service (and potentially your business) to a server in such a state? SharePoint database tend to be pretty darn big compared to most databases and if it is clear the SQL box has been poorly managed until now, what sort of assurance is there that it will be managed any better going forward?

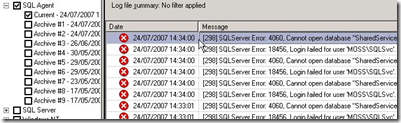

No alerting or decent logging

SQL is perfectly capable of providing very detailed auditing, logging and alerting of problems and exception type conditions. But in my experience, it is very rare to see Database Mail and SQL Operators/Alerts set up.

At the very least, if a maintenance plan job fails (such as a database integrity check), you would think it would be prudent to be informed about it no?

So if you have never set up operators and alerts, then do yourself a favour and make sure that any alert with a severity of 16 and up is sent to an operator as this indicates a backup failure. In fact, any alerts from severity 16 and up should ideally be alerted to an operator as the general rule is the higher the severity, the nastier the error.

For a good write-up of the steps to enable email notification, operators and alerts, I recommend Jeremy Kadlec’s article.

Never tested SQL backup agent

Okay I will admit it. I don’t trust backup agents. At various points in my dim, dark past I have been burnt by backup agents behaving badly. I still use them and test them out of course. However, past experience has taught me to always adhere to the principle of giving myself as many options as possible in the event of a disaster recovery situation.

Unfortunately, it is not hard to find backup/recovery horror stories where backups failures have gone unnoticed for months, only to be found the hard way (i.e when a catastrophic failure hits and a restore is needed). As recently as last month I was called in to a catastrophic restore situation where the much touted (and expensive) enterprise backup software failed to restore a SharePoint web application via its native (and untested) SharePoint agent. The backup software failed with a cryptic code and an even more cryptic log file. Fortunately for all concerned, I had previously set up a maintenance plan at this site and restored it the old fashioned simple way.

Moral? Assume nothing! Always test your backup to make sure that a restore works and that the process is documented.

Be your own SQL god!

There are other areas to look at too, but I have listed enough for you to get the idea. So remember, if you want to be considered a "SQL god" and be cool, then heed my advice. Take a good look at your SQL box. Drop in a maintenance plan or two and just like me, people will think you are some sort of genius 🙂

Thanks for reading

Paul Culmsee

CISSP, MCSE, "SQL God", MCT

Ron Grzywacz’s blog has some good links around this subject of maintenance plans and stuff, so anyone interested should check his post out:

http://blogs.msdn.com/ronalg/archive/2008/08/27/sql-maintenance-for-sharepoint-databases.aspx

Ah good point Jeremy, I forgot to mention the issue with the “rebuild index” task and SharePoint. Information about that “gotcha” can be found at http://www.cleverworkarounds.com/2008/07/09/office-server-search-memory-leak-and-stuck-on-crawling/.

Interesting, SP2 for SQL cures this problem. I actually had a section in the article about lack of patching but took it out because that is a generalised problem, not just with SQL Server.

dbcc shrinkdatabase has made me look like a SQL guru time and time again. Also, why does anyone save transaction logs in development? You can just change to simple recovery. Nobody is going to replay your logs.

Is it complexity of the product or complextity of requirements that causes misunderstanding. I do rather like the description (pictorial) at BusinessBalls, perhaps it will help you also.