Demystifying SharePoint Performance Management Part 6 – The unholy trinity of Latency, IOPS and MBPS

Hi all

Welcome to part 6 of my series on making SharePoint performance management that little bit more digestible. To recap where we have been, I introduced the series by comparing lead versus lag indicators before launching into an examination of Requests Per Second (RPS) as a performance indicator. I spent 3 posts on RPS and then in the last post, we turned our attention to the notion of latency. We watched a Wiggles video and then looked at all of the interacting components that work together just to load a SharePoint home page. I spent some time explaining that some forms of latency cannot be reduced because of the laws of physics, but other forms of latency are man-made. This happens when any one of the interacting components is sub-optimally configured and therefore introduces unnecessary latency into the picture. I then asserted that disk latency is one of the most common areas that is ripe for sub-optimal configuration. I finished that post by looking at how a rotational disk works and the strategies employed to mitigate latency (cache, RAID, SANs etc.).

Now on the note of cache, RAID and SANs, Robert Bogue, who I mentioned in part 1, has also just published an article on this topic area called Computer Hard Disk Performance – From the Ground Up. You should consider Robert’s article part 5.5 of this series of posts because it expands on what I introduced in the last post and also spans a couple of the things I want to talk about in this one (and goes beyond it too). It is an excellent coverage of many aspects of disk latency and I highly recommend you check it out.

Right! In this post, we will look more closely at latency and understand its relationship with two other commonly cited disk performance measures: IOPS and MBPS. To do so, let’s go shopping!

Why groceries help to explain disk performance

Most people dislike having to wait in line for a check-out at a supermarket and supermarkets know this. So they always try to balance the number of open check-out counters so that they can scale when things are busy, but not pay the operators to stand around when it’s quiet. Accordingly, it is common to walk into a store when it’s quiet and find only one or two check-out counters open, even if the supermarket has a dozen or more of them.

The trend in Australian supermarkets nowadays is to have some modified check-out counters that are labelled as “express.” You can only use these check-outs if you are buying 15 items or less. While the notion of express check-outs has been around forever, the more recent trend is to modify the design of express check-out counters to have very limited counter space and no moving roller that pushes your goods toward the operator. This discourages people with a fully-loaded trolley/cart from using the express lane because there is simply not enough room to unload the goods, have them scanned and put them back in the trolley. Therefore, many more shoppers can go through express counters than regular counters because they all have smaller loads.

This in turn frees up the “regular” check-out counters for shoppers with a large amount of goods. Not only do they have a nice long conveyor belt that gives shoppers plenty of room to unload all of their goods and rolls them to the operator, but often there will be another operator who puts the goods into bags for you as well. Essentially this counter is optimised for people who have a lot of goods.

Now if you were to measure the “performance” of express lanes versus regular lanes, I bet you would see two trends.

- Express lanes would have more shoppers go through them per hour, but fewer goods overall

- Regular lanes would have more goods go through them per hour, but fewer shoppers overall

With that in mind, let’s now delve back into the world of disk IO and see if the trend holds true there as well.

Disk latency and IOPS

In the last post, I specifically focused on disk latency by pointing out that most of the latency in a rotational hard drive is from rotation time and seek time. Rotation time is the time taken for the drive to rotate the disk platter to the data being requested and seek time is how long it takes for the hard drive’s read/write head to then be positioned over that data. Depending on how far the platter has to rotate and the head has to move, latency can vary. Closely related to disk latency is the notion of I/O per second or “IOPS”. IOPS refers to the maximum number of reads and writes that can be performed on a disk in any given second. If we think about our supermarket metaphor, IOPS is equivalent to the number of shoppers that go through a check-out.

The math behind IOPS and how latency affects it is relatively straightforward. Let’s assume a fixed latency for each IO operation for a moment. If for example, your disk has a large latency… say 25 milliseconds between each IO operation, then you would roughly have 40 IOPS. This is because 1 second = 1000 milliseconds. Divide 1000 by 25 and you get 40. Conversely, if you have 5 milliseconds latency, you would get 200 IOPS (1000 / 5 = 200).

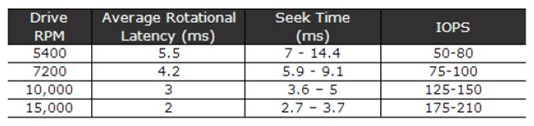
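To make the arithmetic above concrete, here is a minimal Python sketch. It assumes, as the paragraph does, a fixed latency for every IO operation and one operation in flight at a time; the function name `iops_from_latency` is just something I made up for illustration.

```python
def iops_from_latency(latency_ms: float) -> float:
    """Theoretical IOPS ceiling for a fixed per-operation latency (milliseconds).

    Assumes one IO at a time and identical latency for every operation,
    which is the simplification used in the text, not real disk behaviour.
    """
    if latency_ms <= 0:
        raise ValueError("latency must be a positive number of milliseconds")
    return 1000.0 / latency_ms  # 1 second = 1000 milliseconds


# The two examples from the text: 25 ms and 5 ms per operation.
for latency in (25, 5):
    print(f"{latency} ms per IO -> {iops_from_latency(latency):.0f} IOPS")
```

Halving the latency doubles the ceiling, which is why shaving a few milliseconds off each operation matters so much.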

Now if you want to see a more detailed examination of IOPS/latency and the maths behind it, take a look at an excellent post by Ian Atkin. Below I have listed the disk latency and IOPS figures he posted for different speed disks. Note that a 15k RPM disk came in at around 175-210 IOPS, which suggests a typical average latency of between 4.8 and 5.7 milliseconds (1000/175 ≈ 5.7 and 1000/210 ≈ 4.8). Note: Ian’s article explains in depth the maths behind the average calculation in this section of his post.

The big trolley theory of IOPS…

While that math is convenient, the real world is always different to the theoretical reality I painted above. In the world of shopping, imagine if someone with one or two trolleys full of goods, like the picture below, decided to use the express check-out. It would mean that all of the other shoppers get annoyed and have to wait around for this shopper’s goods to be scanned, bagged and put back into the trolley. The net result of this is a reduced number of shoppers going through the check-out too.

While the inefficiencies of a supermarket are easy for most people to visualise, disk infrastructure is less so. So while the size of our trolley has an impact on how many people come through a check-out, in the disk world, the size of the IO request has precisely the same effect. To demonstrate, I ran a basic test using a utility called SQLIO (which I will properly introduce you to in part 7) on one of my virtual machines. Below are the results of writing data randomly to a 500GB disk. In the first test we wrote to the disk using 64KB writes and in the second test we used 4KB writes. The results are below:

| Size of Write | IOPS Result |
| --- | --- |
| 64KB | 279 |
| 4KB | 572 |

Clearly, writing 4KB of data over time resulted in a much higher IOPS than when using 64KB of data. But just because there is a higher IOPS for the 4KB write, do you think that is better performance?

Disk latency and MBPS

So far the discussion has been very IOPS focussed. It is now time to rectify this. In terms of the SQLIO test I performed above, there was one other performance result I omitted to show you – the Megabytes per second (MBPS) of each test. I will now add it to the table below:

| Size of Write | IOPS Result | MBPS Result |
| --- | --- | --- |
| 64KB | 279 | 17.5 |
| 4KB | 572 | 2.25 |

Interesting eh? This additional performance metric paints a completely different picture. In terms of actual data transferred, the 4KB option did only 2.25 megabytes per second whereas the 64KB transferred almost 8 times that amount! Thus, if you were judging performance based on how much data has been transferred, then the 4KB option has been an epic fail. Imagine the response of 500 SharePoint users, loading the latest 30 megabyte annual report from a document library if SharePoint used 4KB reads … Ouch!
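The link between the two columns is simple multiplication: throughput is the number of IOs per second times the size of each IO. The sketch below recomputes MBPS from the IOPS figures in the table, assuming 1 MB = 1024 KB. The function name `throughput_mbps` is my own and is not anything SQLIO provides.

```python
def throughput_mbps(iops: float, io_size_kb: float) -> float:
    """Data moved per second in MB, assuming 1 MB = 1024 KB."""
    return iops * io_size_kb / 1024.0


# Random-write results from the table above: (IO size in KB, measured IOPS).
results = [(64, 279), (4, 572)]

for size_kb, iops in results:
    mbps = throughput_mbps(iops, size_kb)
    print(f"{size_kb}KB writes at {iops} IOPS -> {mbps:.2f} MB/s")
```

The computed values (about 17.44 and 2.23 MB/s) land very close to the 17.5 and 2.25 reported by the test, so the small-write case doubles the IOPS while moving roughly an eighth of the data.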

So the obvious question is why did a high IOPS equate to a low MBPS?

The answer is latency again (yup – it always comes back to latency). From the time the disk was given the request to the time it completed, writing 4KB simply doesn’t take as long to write as 64KB does. Therefore there are more IOPS that take place with smaller writes. Add to that, the latency from disk rotation and seek time per IO operation and you start to see why there is such a difference. Eric Slack at Storage Switzerland explains with this simple example:

As an illustration, let’s look at two ways a storage system can handle 7.5GB of data. The first is an application that requires reading ten 750MB files, which may take 100 seconds, meaning the transfer rate is 75MB/s and consumes 10 IOPS. The second application requires reading ten thousand 750KB byte files, the same amount of data, but consumes 10,000 IOPS. Given the fact that a typical disk drive provides less than 200 IOPS, the reads from the second application probably won’t get done in the same 100 seconds that the first application did. This is an example of how different ‘workloads’ can require significantly different performance, while using the same capacity of storage.

Now at this point if I haven’t completely lost you, it should become clear that each of the unholy trinity of latency, IOPS and MBPS should not be judged alone. For example, reporting on IOPS without having some idea of the nature of the IO could seriously mislead. To show you just how much, consider the next example…

Sequential vs. Random IO

Now while we are talking about the IO characteristics of applications, two really important points that I have neglected to mention so far are the range of latency and the impact of sequential IO.

The latency math I did above was deliberately simplified. Seek and rotation times actually fall across a range of values because sometimes the disk does not have to rotate the spindle or move the head far. The result is a much reduced seek latency and accordingly, increased IOPS and MBPS. Nevertheless, the IO is still considered random.

Taking that one step further, often we are dealing with large sections of contiguous space on the hard disk. Therefore latency is reduced further because there is virtually no seek time involved. This is known as sequential access. Just to show you how much of a difference sequential access makes, I re-ran the two tests above, but this time writing to sequential areas of the disk and not random. With the reduced seek and rotation time, the difference in IOPS and MBPS is significant.

| Size of Write | IOPS Result | MBPS Result |
| --- | --- | --- |
| 64KB | 2095 | 131 |
| 4KB | 4152 | 16 |

The IOPS and resulting MBPS have improved significantly from the previous test, to roughly 7 to 7.5 times the earlier figures. Nevertheless, the relationship between the size of the request and IOPS and MBPS still holds true. The smaller the size of the IO request being read or written, the more IOPS can be sustained, but the less MBPS throughput can be achieved. The reverse holds true with larger IO requests.
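If you want to check the size of the sequential gain for yourself, the sketch below divides the sequential IOPS by the random IOPS for each write size, using only the numbers from the two result tables in this post. The dictionary and function names are mine and are purely illustrative.

```python
# IOPS measured in the two SQLIO runs above, keyed by write size in KB.
random_iops = {64: 279, 4: 572}
sequential_iops = {64: 2095, 4: 4152}


def speedup(size_kb: int) -> float:
    """How many times more IOPS the sequential run achieved than the random run."""
    return sequential_iops[size_kb] / random_iops[size_kb]


for size_kb in (64, 4):
    print(f"{size_kb}KB writes: sequential is {speedup(size_kb):.2f}x the random IOPS")
```

Both write sizes gain by a similar factor, while the ordering between them (small IO means more IOPS, large IO means more MBPS) stays exactly the same.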

One conclusion that we can draw from this is that specifying IOPS or MBPS alone has the potential to really distort reality if one does not understand the characteristics of the IO requests involved. For example, let’s say that you are told your disk infrastructure has to support 5000 IOPS. If you assumed a 4KB IO size that is accessed sequentially, then far fewer disks would be required to achieve the result compared to a 64KB IO accessed randomly. In the 64KB case, you would need many disks in an array configuration.
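Here is a rough sketch of that 5000 IOPS example. It makes a big assumption that I want to be loud about: it treats the IOPS figures from my virtual machine tests as if they were what a single disk delivers, and it ignores RAID write penalties, cache and controller limits entirely. The function name `disks_needed` is hypothetical. Treat the output as an illustration of the gap, not a sizing recommendation.

```python
import math


def disks_needed(target_iops: float, per_disk_iops: float) -> int:
    """Minimum number of disks to reach a target, assuming IOPS scale linearly.

    Assumption: no RAID penalty, no cache, no controller bottleneck.
    """
    if per_disk_iops <= 0:
        raise ValueError("per-disk IOPS must be positive")
    return math.ceil(target_iops / per_disk_iops)


TARGET = 5000

# Per-disk figures borrowed from the test results earlier in this post (an assumption).
scenarios = {
    "4KB sequential": 4152,
    "64KB random": 279,
}

for name, per_disk in scenarios.items():
    print(f"{name}: {disks_needed(TARGET, per_disk)} disk(s) for {TARGET} IOPS")
```

Same IOPS target, but the 64KB random workload needs roughly nine times as many disks under these assumptions, which is the distortion the paragraph above is warning about.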

SQL IO Characteristics

So now we get to the million dollar question. What sort of IO characteristics do SQL and SharePoint have?

I will answer this by again quoting from Ian Atkin’s brilliant “Getting the Hang of IOPS” article. Ian makes a really important point that is relevant to SQL and SharePoint in his article which I quote below:

The problem with databases is that database I/O is unlikely to be sequential in nature. One query could ask for some data at the top of a table, and the next query could request data from 100,000 rows down. In fact, consecutive queries might even be for different databases. If we were to look at the disk level whilst such queries are in action, what we’d see is the head zipping back and forth like mad -apparently moving at random as it tries to read and write data in response to the incoming I/O requests.

In the database scenario, the time it takes for each small I/O request to be serviced is dominated by the time it takes the disk heads to travel to the target location and pick up the data. That is to say, the disk’s response time will now dominate our performance.

Okay, so we know that SQL IO is likely to be random in nature. But what about the typical IO size?

Part of the answer to this question can be found in an appropriately titled article called Understanding Pages and Extents. It is appropriate because as far as SQL Server database files and indexes are concerned, the fundamental unit of data storage in SQL Server is an 8KB page. The important point for our discussion is that many disk I/O read and write operations are performed at the page level. Thus, one might assume that 8KB should be the size used when working with IOPS calculations because it is possible for SQL to write 8KB to disk at a time.

Unfortunately though, this is not quite correct for a number of reasons. Firstly, eight contiguous 8KB pages are grouped into something called an extent. Given that an extent is a set of 8 pages, the size of an extent is 64KB. SQL Server generally allocates space in a database on a per-extent basis and performs many reads across extents (64KB). Secondly, SQL Server also has a read-ahead algorithm that means SQL will try to proactively retrieve data pages that are going to be used in the immediate future. A read-ahead is typically from 1 to 128 pages for most editions, which translates to between 8KB and 1024KB. (For the record, there is a huge amount of conflicting information online about SQL IO characteristics. Bob Dorr’s highly regarded SQL Server 2000 I/O Basics article is the place to go for more gory detail if you find this stuff interesting.)
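The page, extent and read-ahead sizes quoted above all come from the same multiplication, which this small sketch spells out. The constant names are mine; the values (8KB pages, 8 pages per extent, 1 to 128 pages per read-ahead) are the ones given in the text.

```python
PAGE_KB = 8              # size of one SQL Server data page, per the text
PAGES_PER_EXTENT = 8     # eight contiguous pages make up an extent
READ_AHEAD_PAGES = (1, 128)  # typical read-ahead range quoted in the text


def pages_to_kb(pages: int) -> int:
    """Size in KB of an IO that covers the given number of pages."""
    return pages * PAGE_KB


extent_kb = pages_to_kb(PAGES_PER_EXTENT)
read_ahead_kb = tuple(pages_to_kb(p) for p in READ_AHEAD_PAGES)

print(f"Extent size: {extent_kb}KB")
print(f"Read-ahead range: {read_ahead_kb[0]}KB to {read_ahead_kb[1]}KB")
```

So a single SQL Server IO can be anywhere from one page to 128 pages, which is why picking one "typical" IO size for IOPS calculations is harder than it first looks.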

A read-ahead interlude…

Before we get into SharePoint disk characteristics, it is worthwhile mentioning a great article by Linchi Shea called Performance Impact: Some Data Points on Read-Ahead. Linchi did an experiment by disabling read-ahead behaviour in SQL Server and measuring the performance of a query on 2 million rows. With read-ahead enabled, it took 80 seconds to complete. Without read-ahead it took 210 seconds. The key difference was the size of the IO requests. Without read-ahead the reads were all 8KB as per the page size. With read-ahead, it was over 350KB per read. Linchi makes this conclusion:

Clearly, with read-ahead, SQL Server was able to take advantage of large sized I/Os (e.g. ~350KB per read). Large-sized I/Os are generally much more efficient than smaller-sized I/Os, especially when you actually need all the data read from the storage as was the case with the test query. From the table above, it’s evident that the read throughput was significantly higher when read-ahead was enabled than it was when read-ahead was disabled. In other words, without read-ahead, SQL Server was not pushing the storage I/O subsystem hard enough, contributing to a significantly longer query elapsed time.

So for our purposes, let’s accept that there will be a range of IO sizes for reads and writes to databases, between 8KB and 1024KB. For disk IO performance testing purposes, let’s assume that much of this is across the extent boundaries of 64KB. Based on our discussion of latency and MBPS, where the larger the IO being worked with, the lower the IOPS, we can now get a better sense of just how much disk might need to be put into an array to achieve a particular IOPS target. As we saw with the examples earlier in this post, 64KB IO sizes result in more latency and lower IOPS. Therefore SharePoint components requiring a lot of IOPS may need some pretty serious disk infrastructure.

SharePoint IO Characteristics

This brings us onto our final point for this post. We need to understand what SharePoint components are IO intensive. The best place to start to determine this is page 29 of Microsoft’s capacity planning guide as it supplies a table listing the general performance requirements of SharePoint components. A similar table exists on page 217 of the Planning guide for server farms and environments for Microsoft SharePoint Server 2010. We will finish this post with a modified table that shows all the SharePoint components listed with medium to high IOPS requirements from the capacity planning guide, along with some of the comments from the server farm planning guide. This gives us some direction as to the SharePoint components that should be given particular focus in any sort of planning. Unfortunately, IOPS requirements are inconsistently written about in both documents.

| Service Application | Service Description | SQL Server IOPS |
| --- | --- | --- |
| SharePoint Foundation Service | The core SharePoint service for content collaboration. Almost all of the IOPS occurs in SharePoint content databases. IOPS requirements for content databases vary significantly based on how your environment is being used, and how much disk space and how many servers you have. Microsoft recommends that you compare the predicted workload in your environment to one of the solutions that they have tested. I will be covering this in part 8. | XXX |
| Logging Service | The service that records usage and health indicators for monitoring purposes. The Usage database can grow very quickly and require significant IOPS. Use one of the following formulas to estimate the amount of IOPS required: | XXX |
| SharePoint Search Service | The shared service application that provides indexing and querying capabilities. There is a dedicated document that, among other things, covers IOPS requirements. For the Crawl database, search requires from 3,500 to 7,000 IOPS. | XXX |
| User Profile Service | The service that powers the social scenarios in SharePoint Server 2010 and enables My Sites, Tagging, Notes, Profile sync with directories and other social capabilities. No mention of IOPS is made in either of the planning guides. | XXX |
| Web Analytics Service | The service that aggregates and stores statistics on the usage characteristics of the farm. The planning guide suggests readers consult a dedicated planning guide for web analytics, but unfortunately no mention of IOPS is made, let alone a recommendation. | XXX |
| Project Server Service | The service that enables all the Microsoft Project Server 2010 planning and tracking capabilities in addition to SharePoint Server 2010. No mention of IOPS is made in either of the planning guides. | XXX |
| PowerPivot Service | The service to display PowerPivot enabled Excel worksheets directly from the browser. No mention of IOPS is made in either of the planning guides. | XX |

(In case it is not obvious, XX – Indicates medium IOPS cost on the resource and XXX indicates high IOPS cost on the resource)

Conclusion (and coming up next)

Whew! I have to say, that was a fairly big post, but I think we have broken the back of latency, IOPS and MBPS. In the next post, we will put all of this theory to the test by looking at the performance counters that allow us to measure it all, as well as play with a couple of very useful utilities that allow us to simulate different scenarios. Subsequent to that, we will look at these measures from a lead indicator perspective and then examine some of Microsoft’s results from their testing.

Until then, thanks very much for reading. As always, comments are greatly appreciated.

Paul Culmsee
