Demystifying SharePoint Performance Management Part 5 – So what is latency anyway?

Hi all

Welcome to part 5 in my attempt to make SharePoint performance management a little more accessible. Now that we have dealt with the world of requests per second in parts two, three and four, we will focus our attention somewhere different for a post or three.

To set the scene, we are going to take a bit of an end to end look at what it takes to load a SharePoint page. I suspect some readers do not have the full picture on just how many components interact together just to load the SharePoint home page. Things are much more complex in reality than the typical architectural view that adorns SharePoint blogs. A typical SharePoint diagram will list the servers and their roles, but what about all…

- the network gear like routers, switches, reverse proxies and firewalls that are part of the mix?

- the VMware or HyperV virtual hosts that provide the virtualised servers? And

- the storage area network and its associated paraphernalia that these virtual servers make use of for disk infrastructure?

Make no mistake people, configurations these days are hugely complex and have many moving parts. If any of the various components listed above were to fail or become a bottleneck, the performance of the entire system suffers. Therefore, we need assurance that each component has been optimised to ensure overall function.

This brings us onto the topic of latency. If you are not sure what latency is, I can guarantee that you actually do know. You see, if you have ever experienced a jittery Skype call, or your pornography is slow to load, or you have watched a roving reporter respond several seconds after being asked a question from the studio, you are experiencing latency.

Now, the important point to make straight up is that latency is unavoidable because of the laws of physics. Take the example of one of the rovers that NASA sent to Mars. All radio signals to Mars travel at the speed of light (which despite Star Trek’s best efforts to persuade us otherwise, is the absolute speed limit of the universe). The speed of light is around 300,000 kilometres per second and the distance to Mars is currently around 150 million kilometres from Earth. So doing some basic math, we find that it takes a little over 8 minutes for a signal to get from Earth to Mars.

- 150,000,000 / 300,000 = 500 seconds

- 500 / 60 = 8.3 minutes

In this example of latency, no matter what happens, there will always be around 8 minutes of latency between the time an instruction is sent to a rover, to the time it receives and acts on it. Unless Einstein was wrong, this isn’t about to change in a hurry either.
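If you would rather let a computer do the sums, here is a minimal Python sketch of the same calculation. It uses the rounded figures from above (300,000 km/s and 150 million km); the function name is my own invention for illustration.

```python
SPEED_OF_LIGHT_KM_PER_S = 300_000  # rounded figure used in this post


def one_way_delay_seconds(distance_km: float) -> float:
    """Minimum one-way signal delay over a given distance, in seconds."""
    return distance_km / SPEED_OF_LIGHT_KM_PER_S


mars_delay = one_way_delay_seconds(150_000_000)
print(f"One way: {mars_delay:.0f} seconds ({mars_delay / 60:.1f} minutes)")

# An instruction plus its acknowledgement has to cross the gap twice.
round_trip = 2 * mars_delay
print(f"Round trip: {round_trip:.0f} seconds ({round_trip / 60:.1f} minutes)")
```

The round trip line is the one that matters if you are waiting for a reply: the delay is paid once in each direction.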

A “lean” view of latency…

Latency is a concept that extends beyond the forces of nature. Let me give you another form of latency that I am sure you have experienced, using Microsoft as the straw man. Let’s say you have a problem with SharePoint and you log a call with Microsoft or your support provider. You call the technical support line and after twiddling your thumbs in the telephone queue for an eternity, you get an inexperienced level 1 tech, who doesn’t understand your problem at all and is hell bent on closing your call anyway because someone higher up in the organisation actually believed that call-time is an indicator of happy customers. You repeat yourself each and every time as your call is slowly routed up the tech support hierarchy. Finally, by the time you get to level 3 or 4, you finally get a good tech who gives you the quick answer you were looking for. The problem is that three weeks have passed to get to this point.

This is also a form of latency. But unlike the first example, it was not the laws of nature this time, but man-made laws that caused the wasted time. I will call it organisational latency. Addressing this form of latency is a multi-billion dollar industry, and keeps an army of organisational/process improvement consultants busy, trying to reduce wastage and improve customer outcomes (now you know what Lean is all about if you hear people talking about it).

So, returning to the SharePoint context – we have a lot of moving parts. We know we cannot alter the laws of physics, but how do we know whether all of the various components are working to their optimum level? Is there any man-made latency that we could reduce or eliminate?

Oh, yes, indeedie there is… and to put some context to it, let’s utilise the musical genius that is the Wiggles. I found that their rendition of the old folk song “Dem bones” serves my purpose nicely.

When you perform the seemingly benign task of requesting a page with your browser, an amazing number of things have to happen. The browser forms a HTTP request and then passes this to the TCP/IP stack on your PC, which takes the HTTP request and breaks it up into IP packets. These packets are passed to your network card driver, which turns them into Ethernet frames and sends them over the wire. Each network device (switch, router, etc.) has to process each frame or IP packet and work out where to forward it. Eventually the request finds its way to the destination server, where the Ethernet frames are stripped and the IP packets are reassembled into the original HTTP request, which is passed to IIS. SharePoint then acts on it.

Now all I described above was the task of getting a HTTP request from a browser to a server. To see the entire picture, let’s all sing along with the Wiggles shall we? We will assume a two server deployment, utilising a HyperV based virtual web front end SharePoint server and a physical SQL Server. Both servers use a Storage Area Network (SAN) for disk. Cue the melody from “Dem Bones”…

- Your PC connects to a distribution switch

- The distribution switch is connected to the core switch

- The core switch connects to the HyperV host

- The HyperV host connects to the virtual Web Front End Virtual Machine

… okay so we have managed to get from our browser to the SharePoint web front end but at this point, the web front end hasn’t really done anything yet. In terms of latency, we had to get through the switches, as well as the virtualisation infrastructure to the virtual SharePoint web front end box. The switches had very little latency at all – probably around 1-2 microseconds (which translates to about 0.001 to 0.002 milliseconds) for a network packet to go in one port and out the other. The virtualisation infrastructure also introduced some latency, because there is overhead in running a virtual machine within a physical machine. However, assuming it is well configured and that there aren’t too many virtual machines competing for physical resources like CPU and memory, then that latency is fairly negligible.

Now the virtual web front end server needs to actually deal with the request from your PC. This involves pulling data from the disk infrastructure, so back to the Wiggles we go…

- The Web Front End Virtual Machine connects to the HyperV host

- The HyperV host connects to the SAN Switch

- The SAN Switch connects to the Storage Array

- The Storage Array connects to the Web Front End disk

- The Web Front End disk returns data to the SAN Switch

- The SAN switch returns data to the HyperV host

- The HyperV host returns data to the Web Front End Virtual Machine

…at this point, the web front end server has retrieved any data it needs to from the disk subsystem. There was definitely latency here. The SAN switches have a similar latency to network switches which is negligible, but the physical disks on the SAN are another story (which we will get to soon). But wait a second – that just loads the stuff the web front end server stores or caches locally, as well as writing to the IIS and SharePoint logs. What about all those sexy web parts you have on the front page that aggregate the latest news feed? That stuff needs to pull information from the SharePoint content database on the SQL Server. So let’s continue, now incorporating the connection between the virtual web front end and SQL Server (Remember, I am assuming the SQL box is not virtualised).

- The Web Front End Virtual Machine connects to the SQL box (via the network on TCP port 1433)

- The SQL Box connects to the SAN Switch

- The SAN Switch connects to the Storage Array

- The Storage Array connects to the SQL disk

- The SQL disk returns data to the SAN Switch

- The SAN switch returns data to the SQL box

- The SQL Box returns data to the Web Front End Virtual Machine (via the network on TCP port 1433)

- The Web Front End Server returns the page to your PC (via the network on TCP port 80)

Now at this point, non tech oriented readers might be thinking, “Bloody hell! I didn’t realise there were that many interactions.” For you guys… now you know why tech guys are the way they are. Tech guys reading this would know full well that I glossed over many things. For example, I did not include the authentication process in the sequence above, nor did I describe important virtualisation aspects such as VM memory compression. On top of that I glossed big-time over the full gamut of SAN I/O paths.

There is a form of man-made latency that can occur in any of the steps outlined above as a result of the complexity. It is very easy to overlook an important aspect, to misconfigure something, or to assume the default configuration is optimal. In my consulting experience I have seen suboptimal configuration in all of the above touchpoints, but out of all of them, there is one area that is far more likely to have latency issues than any of the others: the disk infrastructure.

We will round out this post by taking a fairly high level look at disk infrastructure and why it is latency prone.

Understanding disk latency

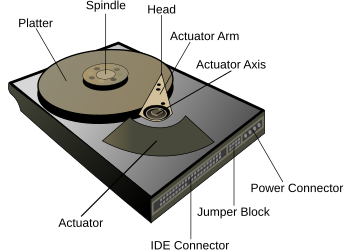

Above is a Wikipedia picture that shows the essential components of most hard drives. This type of hard drive is really not that different from its original design in 1954. It is called a rotational hard drive: the spindle rotates the platter, while the actuator arm moves the head to the right position to read data off it. As you can imagine, this happens pretty fast too. Most high end hard drives spin the platter at 15000RPM – dizzying, eh?

But to put disk performance in perspective, consider my previous example of a network switch with a 1-2 microsecond latency to process an Ethernet frame as it transits from one network port to another. By comparison, a modern hard drive takes a hell of a lot longer to do what it needs to do. As a simple example, at 15000RPM it takes around 2 milliseconds (or 2000 microseconds) on average just for the platter to rotate the right sector under the head. Not only is this a staggering 1000 to 2000 times slower than the network switch, but it does not take into account the time it takes for the hard drive’s read/write head to be positioned over the right track in the first place (this is called seek time and can take anywhere between 3 and 15 milliseconds).
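For those who like to see where the 2 millisecond figure comes from, here is a rough Python sketch. It assumes the usual rule of thumb that, on average, the sector you want is half a revolution away from the head. The function names are mine, and the 7200 and 10000 RPM rows are other common spindle speeds added purely for comparison.

```python
def rotation_time_ms(rpm: float) -> float:
    """Time for one full platter revolution, in milliseconds."""
    return 60_000 / rpm


def average_rotational_latency_ms(rpm: float) -> float:
    """Average wait for a sector: assumed to be half a revolution."""
    return rotation_time_ms(rpm) / 2


for rpm in (7200, 10000, 15000):
    latency_ms = average_rotational_latency_ms(rpm)
    print(f"{rpm} RPM: about {latency_ms:.2f} ms average rotational latency")

# Compare the 15000 RPM drive with a switch taking 1-2 microseconds per frame.
disk_us = average_rotational_latency_ms(15000) * 1000
print(f"{disk_us / 2:.0f}x to {disk_us / 1:.0f}x slower than the switch")
```

Note that this covers rotation only. Seek time comes on top of it, so the real gap between a disk and a switch is wider still.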

This latency clearly is problematic, and vendors compensate by utilising multiple sets of disks and liberal use of cache technology to mitigate it. Imagine putting 10 hard disks together so that when data is saved, parts of it are written to each hard disk. Now you have reduced latency because each drive is handling a smaller part instead of a single drive handling it all. It is important to note that we have done nothing about laws of physics latency per single drive (thanks Robert Bogue for pointing that out), but throughput induced latency has reduced by using them all. It is just like when you are at the supermarket and there are ten check-out operators working instead of one. The wait times are much shorter because there are more check-out operators available to service the requests. (This is the essence of RAID technology and should be familiar to most readers).
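The check-out analogy can be sketched in a few lines of Python. This is a deliberately simplified model, not how a real RAID controller schedules I/O: it assumes requests are spread evenly across the disks, each disk handles one request at a time, and every request takes the same fixed service time. The 100 requests and 10 ms per request are made-up numbers for illustration.

```python
import math


def worst_case_wait_ms(requests: int, disks: int, service_ms: float) -> float:
    """Time until the last queued request completes.

    Assumes requests are spread evenly over the disks and each disk
    services one request at a time at a fixed service time.
    """
    rounds = math.ceil(requests / disks)
    return rounds * service_ms


SERVICE_MS = 10.0  # assumed per-request time; the same for every disk
for disks in (1, 10):
    wait = worst_case_wait_ms(100, disks, SERVICE_MS)
    print(f"{disks} disk(s): last of 100 requests finishes after {wait:.0f} ms")
```

The per-request service time never changes, which is the laws of physics part. What shrinks is the time spent standing in the queue.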

But there is still more to the latency story than disks taking time to do their thing. At the operating system level, there are various layers and drivers doing stuff. I won’t go too much into this except to suggest that if the world of Class Drivers, Port Drivers, Device Miniport Drivers and Disk Subsystems rocks your world, then Jeff Hughes has a great writeup where he describes the whole Windows disk IO system in detail.

A note on SSD

I would be remiss not to make a point about these newfangled Solid State Drives (you might have heard them referred to as SSDs). This is a newer drive technology that does not employ any moving mechanical components, like platters and movable read/write heads. Solid State Drives have some seriously improved performance in terms of latency, because they store the data in persistent memory. Wikipedia cites SSD latency as around 0.1 milliseconds, compared to around 5-10 milliseconds for rotational drives. The downside is that they are more expensive than traditional rotational disks. According to a May 2012 article, SSDs cost approximately US$0.65 per GB whereas traditional hard disks cost about US$0.05 per GB. Expect prices to continue to fall and for them to appear in more and more solutions.
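To make that trade-off concrete, here is a small Python sketch using only the figures quoted above. The 1000 GB volume size is a hypothetical number I picked for illustration, and the prices are the 2012 ones, so treat the output as a snapshot rather than a buying guide.

```python
# Figures quoted in this post (Wikipedia and a May 2012 article)
SSD_LATENCY_MS = 0.1
HDD_LATENCY_MS = (5.0, 10.0)  # rotational drives: 5-10 ms
SSD_COST_PER_GB = 0.65        # US$
HDD_COST_PER_GB = 0.05        # US$


def cost_usd(capacity_gb: float, cost_per_gb: float) -> float:
    """Raw media cost for a given capacity."""
    return capacity_gb * cost_per_gb


capacity_gb = 1000  # hypothetical volume size, for illustration only
ssd_cost = cost_usd(capacity_gb, SSD_COST_PER_GB)
hdd_cost = cost_usd(capacity_gb, HDD_COST_PER_GB)
price_ratio = SSD_COST_PER_GB / HDD_COST_PER_GB
low, high = (hdd / SSD_LATENCY_MS for hdd in HDD_LATENCY_MS)

print(f"SSD: US${ssd_cost:.0f}, rotational: US${hdd_cost:.0f}")
print(f"SSD costs about {price_ratio:.0f}x more per GB")
print(f"SSD latency is about {low:.0f}x to {high:.0f}x lower")
```

On those numbers you pay roughly 13 times more per GB for something like 50 to 100 times less latency.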

Then there are SANs

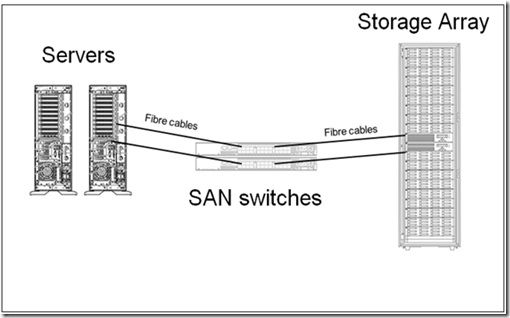

In terms of disk infrastructure and latency aspects, most organisations utilise a Storage Area Network (SAN) topology. I previously mentioned the idea of RAID configurations that make use of multiple disks to improve latency (among other things). SANs take the RAID idea and abstract it further, as shown in the diagram above.

(credit for this image is Orbis solutions: http://orbissolutionsinc.com/blog/tag/storage-arrays/)

I sometimes describe SANs to people as a “fridge full of hard drives connected to multiple servers”. What the above diagram shows is that the disks are physically not connected to the servers that use them. Instead they are connected to a storage array via cables, with a switch or three in between. Each server has some disk space reserved for it on the SAN. So the result is we have one centralised high performing disk array where we can take advantage of all of the disks housed within.

But it’s important to understand here that each time data is read from or written to disk, it passes across those cables and through the switches. Like an internet connection, the SAN switches and cables not only have bandwidth limitations, but are prone to misconfiguration. Imagine 50 servers writing data at the same time. If things are not well configured, the SAN switch infrastructure might become overwhelmed like a freeway during peak hour. Direct attached storage (i.e. the hard drive or RAID array is plugged into the server directly) typically has a higher bandwidth. This quote from a nice sqlteam.com article on SAN performance explains it well.

For instance, if a server is equipped with two older 1-Gbps host bus adapters (HBAs), its MBps throughput would be capped at about 200MB per second no matter how powerful the rest of the SAN is. Replacing the 1-Gbps HBAs with two newer 4-Gbps HBAs or adding more HBAs may improve the throughput, if the HBAs are indeed the throughput bottleneck. But the SAN drive throughput could also be limited by the maximum throughput of the inter-switch links in the SAN switched fabric. Further down the I/O paths, the front-side adapter ports on the disk array, the cache in the disk array, the disk controllers, and the disk spindles can all become the bottleneck limiting the MBps throughput of the SAN drive.
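The point of that quote is that a SAN I/O path is only as fast as its slowest stage. Here is a minimal Python sketch of the idea. Only the 200 MB per second HBA cap comes from the quote. Every other number is hypothetical and invented for illustration, including the assumption that two 4-Gbps HBAs scale linearly to 800 MB per second.

```python
from typing import Dict, Tuple


def find_bottleneck(stages_mb_per_s: Dict[str, float]) -> Tuple[str, float]:
    """Return the slowest stage on an I/O path and its throughput cap."""
    slowest = min(stages_mb_per_s, key=stages_mb_per_s.get)
    return slowest, stages_mb_per_s[slowest]


io_path = {
    "HBAs (2 x 1 Gbps)": 200.0,       # figure from the quote
    "inter-switch links": 400.0,      # hypothetical
    "array front-side ports": 800.0,  # hypothetical
    "disk spindles": 600.0,           # hypothetical
}
print(find_bottleneck(io_path))

# Swap in faster HBAs and the bottleneck simply moves further down the path.
upgraded = dict(io_path)
del upgraded["HBAs (2 x 1 Gbps)"]
upgraded["HBAs (2 x 4 Gbps)"] = 800.0  # assumed linear scaling from the quote
print(find_bottleneck(upgraded))
```

With these made-up numbers, upgrading the HBAs lifts the cap from 200 to 400 MB per second and no further, because the inter-switch links become the new limit.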

Conclusion and coming next…

Okay… at this point let’s take a breather. For the tech guys reading this post, none of what I covered may seem particularly earth shattering, but it was important to set the context for a deeper dive into disk latency in the next couple of posts. If you are not normally of the tech persuasion, then I hope that this post has opened your eyes a little to just how complicated deployments can be and accordingly, how hard it can sometimes be to pinpoint latency issues when they occur.

In the next post, we will take a deeper look at disk latency and its relationship to the indicators of IOPS and MBPS. We will then examine tools to measure latency and how to best use it as a lead indicator.

Until then, thanks for reading and be sure to check out my recent business book “The Heretic’s Guide to Best Practices”.

Paul Culmsee
